- Key concepts:

- Web 2.0, nomenclature, neologisms, archaeologisms, spimes, blogjects, fabjects, blobjects, thinglinks, everyware, FlickR, Wikipedia, Jimmy Wales, Caterina Fake, ubiquitous computation, Mark Weiser, Tim O'Reilly, Alan Liu, Adam Greenfield, Ulla-Maaria Mutanen, Julian Bleecker, O'Reilly Media Emerging Technology Conference 2006

- Attention Conservation Notice:

- It's a Bruce Sterling speech at a California technology conference. Delivered at alpha-geek central, it may include indecipherable techie in-jokes. Well over 6,000 words. Includes illustrations.

Emerging Technology 2006

San Diego, CA

March 2006

Thanks for that kind introduction, Cory Doctorow. Hi, I'm Bruce Sterling. I write novels. Last time I was at an O'Reilly gig, I delivered a screed about open-source software.

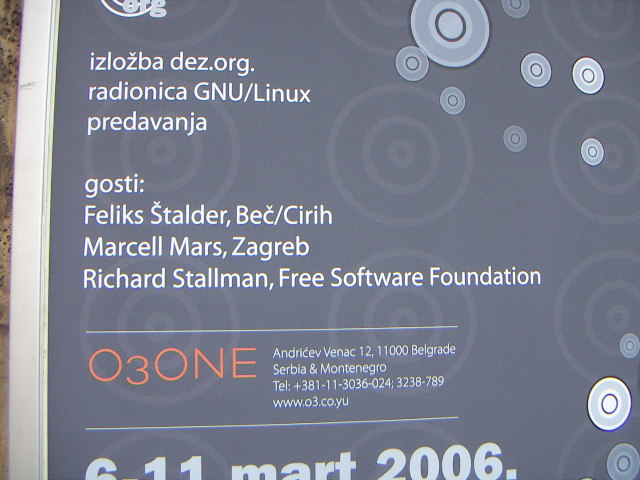

One of the things I said in that speech was that, some day, open-source people were going to become political dissidents. Yeah, I meant real dissidents, man, very 1989 style, very Eastern European... That speech was some years ago, of course... Today I'm actually living in Eastern Europe. I'm living in Belgrade and trying to get some novel-writing done in between fits of blogging... Just as I was leaving Belgrade to come here, Richard Stallman arrived in town. Yeah, it's Stallman, rms, Dr. GNU, he's there to rattle the skeletons in the closets of the outlaw state...

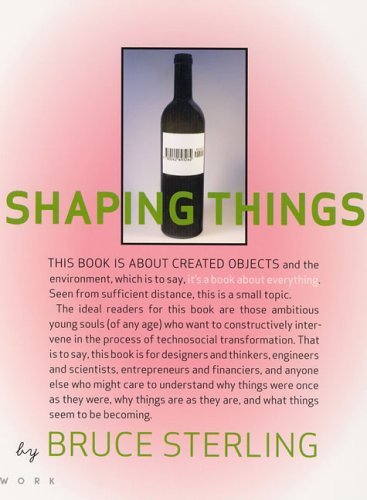

Who says sci-fi writers can't predict the future? My topic tonight is the Internet of Things, a subject I've been lecturing about with grim regularity while trying to write a novel about the topic. Here's a book about it. This book, unfortunately, is not a science fiction novel. This is my little nonfiction MIT Press book. I finally got to the point where I had to write a design manifesto before I could write a novel.

I'm still a science fiction writer, so I'm very interested in things that can't happen yet. The Internet of Things can't happen yet. It is not emerging tech. It is a vast, slow, terrific tech thing trying to emerge. Web 2.0 is emerging tech, while the Internet of Things, if it happens at all, will probably take about thirty years. Because that is how long barcodes took to permeate society: 30 years.

My guess is that, in about 30 years, I might well wake up surrounded by electronic barcodes and I might well say, "Wow look, I'm in an Internet-of-Things situation here." The drawback is that in the year 2036, I'll be 82 years old. I may seriously require an Internet of Things then, because I'll be senile and/or near death. Those of us design visionaries who are hard at work in the Internet of Things field today are probably building the shelters for our own decline.

At the moment, we're eagerly debating the proper terminology for a future internet of things. This is a rather literary, language-centric speech tonight – as tech gigs go, anyway. Very wordy. If you want to talk Web 2.0, you can at least say, you know: "FlickR," "Wikipedia." You might even meet Jimmy Wales or Caterina Fake, who are not futurists, but actual working web technologists. Web 2.0 is kind of a loose grab-bag of concepts, but Jimmy Wales isn't a loose grab-bag; Jimmy Wales physically exists. In the case of an Internet of Things or ubiquitous computation, the top guru in the field, Mark Weiser of Xerox PARC, has been dead for several years now. In the Internet of Things debate, people are still trying to find the loose verbal grab-bag just to put the concepts into. So I would argue that this work is basically a literary endeavour. When it comes to remote technical eventualities, you don't want to freeze the language too early. Instead, you need some empirical evidence on the ground, some working prototypes, something commercial, governmental, academic or military.... Otherwise you are trying to freeze an emergent technology into the shape of today's verbal descriptions. This prejudices people. It is bad attention economics. It limits their ability to find and understand the intrinsic advantages of the technology.

A good example of freezing the language too early is, I think, Artificial Intelligence. We very early got into the lasting bad habit of referring to computers as "thinking machines." I suspect this verbal metaphor seriously harmed technical development. Even the word "computing" sounds too much like human mathematical thinking. We might have made a much better language choice if we had called computers what the French called them, ordinateurs, "ordinators."

Computers are not "smart," in any useful sense of that term. They don't "think." They don't have "intelligence." Computers don't "know" things and they don't have any literal "memories." They're not artificially intelligent sci-fi beings like HAL 9000. Computers are boxes of circuitry, with strings, and slots for the strings. They are not alive and mentally active; they are just sitting there, ordinating. What is "ordinating," exactly? Well, if we'd invested our attention in figuring that out, instead of awkwardly struggling to make these devices think like a human brain does, then we would have successfully explored the very large set of interesting problems that computers turned out to be really good at.

If you look at today's potent, influential computer technologies, say, Google, you've got something that looks Artificially Intelligent by the visionary standards of the 1960s. Google seems to "know" most everything about you and me; it's a kind of Big Brother, like Colossus, the supercomputer in Colossus: The Forbin Project. But Google is not designed or presented as a thinking machine. Google is not like Ask Jeeves or Microsoft Bob, which horribly pretend to think, and wouldn't fool a five-year-old child. Google is a search engine. It's a linking, ranking and sorting machine.

Linking, ranking and sorting don't sound very sexy, glamorous or philosophically crucial. Instead of nostalgically clinging to the words – the neologisms of the past, which are now archaeologisms – we should pay more attention to the facts on the ground. What works? What matters?

When I think about it: do I really WANT some classical Artificial Intelligence computer that can talk to me just like Alan Turing? Or do I prefer Google? Imagine two start-up companies. One of 'em has got Alan Turing's disembodied talking head inside a box, but no search engines. In the other company, they have no AI, but they get to use Google. Which company out-competes the other? One company asks: where do I find a cheap supplier? In response, they get a really genius math lecture by Alan Turing. Alan is really sincere about it, he's really thinking hard about the problem of supply, there inside his box. The other company has Google, so in about ten seconds they not only find a supplier but all kinds of massively popular links to other suppliers. Which company wins?

I guess you could argue that Alan Turing's super-smart metal head might invent Google, but do you need this roundabout approach? All it takes is a couple of grad students to invent Google.

After that, the folksonomy kicks in, so you get all the linking and ranking and sorting. So you can keep Alan Turing in the box, and I'll even throw in Richard Feynman. They can artificially think, while the rest of us get on with the revolution.

Was it the words that misled us? I think it was, yeah. I think we could have done better with words. Imagine that, back in the days of mainframe punch-card computers, some genius tech commentator had shown up. He looks at the early computer and he sensibly says: wait a minute. Even if there's, like, Boolean logic going on here, this machine has got nothing to do with any actual thinking. This machine is clearly a big card shuffler. It's a linker, a stacker and a sorter. It's got these stiff paper cards and some of the cards have marks on them that can lead to other cards. So it's not best called a "thinking machine" or even a "computer," because doing sums is not the crucial issue here. This thing is best described as something truly new. So I'll call it a "linksorter." No, it's a "tagshuffler." Maybe I'll just say that it ordinates.

A tech world that talked about ordinators, instead of Artificial Intelligence, probably would have produced Google in about 1980.

So language is of consequence. Those of us who make up words about these matters probably ought to do a better job. When I try to describe an Internet of Things, I find that the most refreshing and interesting and promising aspects are not based in older words, but in the present-day realities of computing. It's the new stuff that has emerged from massive human interaction with the technology. Classic computer science never talked about this much, because they just didn't imagine it.

In the past, they just didn't get certain things. For instance:

- the digital devices people carry around with them, such as laptops, media players, camera phones, PDAs.

- wireless and wired local and global networks that serve people in various locations as they and their objects and possessions move about the world.

- the global Internet and its socially-generated knowledge and Web-based, on-demand social applications.

This is a new technosocial substrate. It's not about intelligence, yet it can change our relationship with physical objects in the three-dimensional physical world. Not because it's inside some box trying to be smart, but because it's right out in the world with us, in our hands and pockets and laps, linking and tracking and ranking and sorting.

It does this work in, I think, six important ways:

- with interactive chips, objects can be labelled with unique identity – electronic barcoding or arphids, a tag that you can mark, sort, rank and shuffle.

- with local and precise positioning systems – geolocative systems, sorting out where you are and where things are.

- with powerful search engines – auto-googling objects, more sorting and shuffling.

- with cradle to cradle recycling – sustainability, transparent production, sorting and shuffling the garbage.

- 3D virtual models of objects – virtual design – CAD-CAM, having things present as virtual objects in the network before they become physical objects.

- rapid prototyping of objects – fabjects, blobjects, the ability to digitally manufacture real-world objects directly or almost directly from the digital plans.

Then there are two other new factors in the mix.

If objects had these six qualities, then people would interact with objects in an unprecedented way, a way so strange and different that we'd think about it better if this class of object had its own name. I call an object like this a "spime," because an object like this is trackable in space and time.

Why do we need a new word for a concept like that? Because the old words distract our attention.

"Spimes are manufactured objects whose informational support is so overwhelmingly extensive and rich that they are regarded as material instantiations of an immaterial system. Spimes begin and end as data. They're virtual objects first and actual objects second."

Why would we want to do such a weird thing? Mostly so that we can engage with objects better during their lifecycle, from the moment of invention to their decay. That's the technical reason, the design reason: but the real reason would be because of how that would feel.

"The primary advantage of an Internet of Things is that I no longer inventory my possessions inside my own head. They're inventoried through an automagical inventory voodoo, work done far beneath my notice by a host of machines. So I no longer bother to remember where I put things. Or where I found them. Or how much they cost. And so forth. I just ask. Then I am told with instant real-time accuracy.

"I have an Internet-of-Things with a search engine of things. So I no longer hunt anxiously for my missing shoes in the morning. I just Google them. As long as machines can crunch the complexities, their interfaces make my relationship to objects feel much simpler and more immediate. I am at ease in materiality in a way that people never were before."

That is my visionary thesis. I wrote a little nonfiction book about this prospect last year, where I rattle on about the concept, kinda turning the idea upside down, knocking on it to see what falls out.... It's a small book, but a big topic. Too big for any one thinker.

A neologism, a completely made-up word like 'spime,' is a verbal framing device. It's an attention pointer. I call them "spimes," not because I necessarily expect that coinage to stick, but because I need a single-syllable noun to call attention to the shocking prospect of things that are plannable, trackable, findable, recyclable, uniquely identified and that generate histories.

I also wanted the word to be Google-able. If you Google the word "spime," you find a small company called Spime, and a song by a rock star, but most of the online commentary about spimes necessarily centers around this new idea, because it's a new word and also a new tag. It's turning into what Julian Bleecker calls a "Theory Object," which is an idea which is not just a mental idea or a word, but a cloud of associated commentary and data, that can be passed around from mouse to mouse, and linked-to. Every time I go to an event like this, the word "spime" grows as a Theory Object. A Theory Object is a concept that's accreting attention, and generating visible, searchable, rankable, trackable trails of attention.

The term THEORY OBJECT is itself a kind of theory object. Any real theory object has probably got trackback. Links. Pictures. Maybe a PowerPoint. A website. An FAQ. Maybe some Flash animation. Maybe it's got a database layer and user-centric graphic web apps. I'm a book author, I am into black ink on white paper. So man, I hate all those things. But this is a tech show. Let's check if the visual hardware is still working....

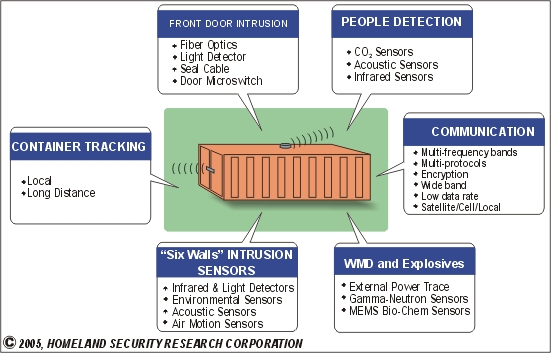

This is what I'm talking about. Not a smart object that computes ubiquitously. I mean the everyday object, the dumbest and cheapest and most obvious thing we have. Except it's got a unique digital identity, so it's trackable, sortable, rankable, findable in space and time. When I put some kind of hot-tag on it or in it, then we have a point of discussion. "Oh, you mean that 'Everyday Object?'" you say. "Well I've got the same one as you, only mine is better." Why? "Because my everyday object is tomorrow's emerging object." Really? No way. "Yes way! I have tomorrow's emerging object 2.0. No really, I can prove that. Just listen to all my friends on the web!" Where'd you get a spime? Oh yeah. From this super Arab-owned-ports high-security spime shipping container.

When they work well, new words like spime are like new brooms. They're good for getting rid of the old words that have turned into dust. New words can sound silly or dangerous, like empty buzzwords, gobbledygook, hype, but this should be understood as the innate nature of language in fast-changing circumstances. Hype is a system-call on your attention. If hype is clearly aimed straight at your wallet, you are right to worry. But hype is only bad for you if you drink it unthinkingly, by the barrel and case. If you soberly track its development, hype is very revealing. Even mistaken and obscurantist hype shows that people are stupid and trying to hide something, which are always good things to know.

In politics, the opposite of hype is political reality. Political hype is BS, it's a campaign speech, it's meant to deceive the listener. But in technology, the opposite of hype is not the truth. The opposite of hype in technology is argot. It's techno-jargon. Argot is not reality, jargon is not the truth. Argot is a super-specialized geek cult language that has no traction in the real world. Argot is the deliberately hermetic language of a small knowledge clique. A small clique likely doesn't have enough people in it to successfully name its own inventions and practices, especially when those inventions and practices emerge from their lab and spread widely throughout a general population. It takes a whole lot of people to manage a popular language. If you know how to watch you can see them at work. You can even just join right in. There are growing numbers of people today writing and talking about the Internet of Things.

Here's another commentator discussing the exact problem I have. He's an author named Adam Greenfield who has an interesting new book out called "Everyware: the Dawning Age of Ubiquitous Computing." I could have called my speech "Everyware," like Adam Greenfield does, instead of calling it Internet of Things (like MIT does). Now I'll explain why I didn't choose to do that.

I'll quote from an interview with him now.

The interviewer asks:

"Everyware" has a lot in common with the contemporary discourses of ubiquitous computing, so why coin an entirely new term?

And Greenfield replies:

'Each of the terms already in use – "ubicomp," "pervasive computing," "tangible media," "physical computing," and so on – is contentious. They're associated with one or another viewpoint, institution, funding source, or dominant personality. I wanted people relatively new to these ideas to be able to have a rough container for them, so they could be discussed without anyone getting bogged down in internecine definitional struggles, like "such-and-such a system has a tangible interface, but isn't really ubicomp."'

I rather admire his term "everyware." It's certainly more elegant than "ubicomp," which is really a verbal disaster as an English noun, or "ubiquitous computation," which takes forever to say and is also hard to spell. I think that Everyware is somewhat confusing in verbal speech, though, because it's a pun. If you Google the word 'everyware' you find there's already a company named Everyware. My main critique of the term is that I'm not sure it carries enough new freight with it. It's a nicer name for an older grab-bag. Has this word been prematurely optimized? Is this word going to scale upward when we start really understanding this emergent technology?

Adam Greenfield is trying to speak and think very clearly, and to avoid internecine definitional struggles. As a literary guy, though, I think these definitional struggles are a positive force for good. It's a sign of creative health to be bogged down in internecine definitional struggles. It means we have escaped a previous definitional box. For a technologist, the bog is a rather bad place, because it makes it harder to sell the product. In literature, the bog of definitional struggle is the most fertile area. That is what literature IS, in some sense: it's taming reality with words. Literature means that we are trying to use words to figure out what things mean, and how we should feel about that.

So don't destroy the verbal wetlands just because you really like optimized superhighways. New Orleans lost a lot of its mud and wetlands. Eventually, the storm-water rushed in, found no nice mud to bog down in, and came straight up over the levees.

There is no permanent victory condition in language. You can't make a word that is like a steel gear.

Personally, I don't believe that ubiquitous computation, as eventually seen in real life, will turn out to be ubiquitous or computation. I don't think it will be "every-ware." I think it's going to be patchy and limited, in much the way that cellphone cells and RFID tags are patchy and limited. I think it's about sorting, tagging, searching, ranking and researching, instead of being some smooth, finished product, like a state-supported Ma Bell universal-access utility.

Time will tell.

In the meantime, you DON'T WANT to avoid the contentiousness and the definitional struggles, exactly BECAUSE they REALLY ARE associated with those viewpoints, institutions, funding sources, and dominant personalities. The words are the signifiers for a clash of sensibilities that really need to clash. You are trying to wallpaper a wall that is still undergoing construction.

Let's take Web 2.0 as a fine example of these word problems. Here we've got the canonical Tim O'Reilly definition of Web 2.0:

"Web 2.0 is the network as platform, spanning all connected devices; Web 2.0 applications are those that make the most of the intrinsic advantages of that platform: delivering software as a continually-updated service that gets better the more people use it, consuming and remixing data from multiple sources, including individual users, while providing their own data and services in a form that allows remixing by others, creating network effects through an 'architecture of participation,' and going beyond the page metaphor of Web 1.0 to deliver rich user experiences."

I think that very long and prolix sentence definitely means something. It's not vacuous or devoid of semantic content. If you're a techie, it's even a kind of emotionally exciting sentence. But if you break this definition down, what you actually find there is a roll-call of social movements: of viewpoints, institutions, funding sources, and dominant personalities. It's about people who Tim considers important, and the stuff they do as a contemporary class of entrepreneurs:

Stuff they do such as:

unintended uses, user contributions, effortless scalability, radical decentralization, customer self-service, mass service of micromarkets, software as a service, right to remix, and architected participation.

Using web-based technologies they are pioneering, such as:

blogs, wikis, voice-over-Internet-Protocol, podcasting, filtering, sharing, social searching, and social bookmarking.

With some nice on-the-ground examples and public accomplishments to point at and praise, such as:

Skype, Wikipedia, KatrinaList, FlickR.

So far, so good. But there's room for verbal improvement.

Now, if you are a professor of the English language who is also highly technically aware, such as Alan Liu, you can find that Tim O'Reilly sentence to be highly problematic. Why? Because it's not language-centric enough. It's too much of a techie banner-ad. It's also too hasty, it is half-baked and lacks historical seasoning; it's not social, economic, political, and cultural in its assessments.

I will quote Alan Liu, from a recent interview he did:

"I am highly skeptical of the 'Web 2.0' hype. There are two reasons for this. One goes back to the issue of history (...). 'Web 2.0' is all about a generation-change in the history of the Web, but from a perspective that is looking at what is happening right now, as opposed to what was happening during the previous generational change (the '1980s'). It's not clear that we can really describe a generation change of this magnitude and complexity while we are in the midst of the change itself, except to say that 'something' is happening that a future generation may decide is qualitatively different. After all, when people speak of Web 2.0, they are actually referring to a swarm of many kinds of new technologies and developments that are not all necessarily proceeding in the same direction (for example, toward decentralization, open content creation and editing, Web-as-service, AJAX, etc.)."

(I think Alan Liu has got a point here. A wagon-train of pioneers on the electronic frontier are not the same thing as a town.)

Alan Liu goes on about the serious semantic problems of simply not knowing what we're talking about, and even tripping up on obvious philosophical contradictions:

"It's not at all certain, for example, that open content platforms in the style of blogs, wikis, and content management systems align with a philosophy of decentralized or distributed control, since many such database- or XML-driven technologies require a priesthood of backend and middleware coders to create the underlying systems and templates for the new 'open' communications. Just how many people in the world, for example, can make one of the current generation of open-source content-management systems (which often start out as blog engines) do anything that isn't on the model of 'post'-and-'category' or chronological posting? Even the more trivial exercise of re-skinning such systems (with a fresh template) requires a level of CSS knowledge that is not natural to the user base."

So what is Alan Liu trying to say here, not particularly clearly? Basically he's saying that in the guise of empowering users through all this participatory foofaraw, Web 2.0 is actually a ploy to return the Internet's technical power to the specialized geek clique that originally built Web 1.0. They stole our revolution, now we're stealing it back. And selling it to Yahoo.

Alan Liu has even more to complain about:

"My second reason for being skeptical about 'Web 2.0' – at least the hype about it – is more important. I think that people who make a big deal out of Web 2.0 are trying to take a shortcut to get out of needing to understand the real generation changes that are happening in the background and that underlie any change in the Web. Those changes occur in social, economic, political, and cultural institutions.

"Web 2.0 is just a high-tech set of waldo gloves or remote-manipulators that tries to tap into the underlying social and cultural changes, but really requires the complement of disciplined sociological, communicational, cognitive, visual, textual, and other kinds of study that can get us closer to the actual phenomena. (...) I don't think there are many developers of Web 2.0 technologies who have done the hard social and cultural studies to help them think about what they are developing. They make a neat system or interface that only taps into some aspects of the social scene. Then, if there are a lot of hits or users, their system is said to be a paradigm. But it's hit or miss. There is no assurance that such technologies are the real, best, coolest, or even most useful 'face,' 'book,' or 'space' of people – only that they are the face, book, or space allowed to surface through a particular lash-up of technologies."

Of course I have my differences with Alan Liu just like I do with Adam Greenfield. Mostly because I'm a big cyberpunk postmodernist cynic. I don't think there EVER comes any time when we get a full social, economic, political, and cultural assessment of a technology. The telegraph is dead, you can't send a telegram in the United States any more, but the historical jury is still out on what the heck the telegraph and telegram really did to us. One of the best books we've got on the subject is a book called "The Victorian Internet" which just gives up and tries to recast that historical experience with the words we've got now.

I entirely agree with Alan Liu that Web 2.0 hype is a lash-up of technologies that is made in a big hurry by catch-as-catch-can hacker types. That might even be considered a virtue. The deeper problem is that language is a lash-up, too. We don't get a red-hot tech lab separate from a cool and contemplative ivory tower where we can make permanent historical judgments. We'd be kind of lucky nowadays just to get "Theory Objects," which are electronic lash-ups of data, ideas and weird riffing. A "Theory Object" is a kind of hack for English majors.

Why do I go on about this? Because it matters. It's a serious part of the work of emerging technology. It's like naming and christening the baby. It's not the sex or the pregnancy, which create and grow the baby. It's the verbal incantation that turns the baby into a social actor. Now that we gave her a name – "Victoria" – now she is a legal person. Now she can be tagged, ranked, searched and sorted.

What's the victory condition? It's the reaction of the public. It starts like this: "I've got no idea what he's talking about." Then it goes straight and smoothly through to "Good Lord, not that again, that's the most boring, everyday thing in the world." That's the victory. To make completely new words and concepts that become obvious, everyday and boring.

Enough words for a second, let's try another picture.

Before you start getting all weird and ludic with digital tags for actual objects, you should read Simson Garfinkel, who knows what he's talking about, technically speaking. My book SHAPING THINGS is a cultural manifesto and a visionary polemic. This book, RFID: Applications, Security and Privacy, manages to cram a lot of the soothsayers into the same book. So read the manual first, before you get your thumbs blown off. Here's another picture: this RFID film.

I apologize for making you look at so many book covers. This image is more elegant and soothing and designery. And it's threatening, in a lot of interesting ways. We'll leave this image up for a while.

Back to the hard grind of the words!

"ThingLink." This is the Wikipedia, Jimmy-Wales-approved version of everyware and spime. I discovered this term in Switzerland, and I got it from Ulla-Maaria Mutanen, who is from Finland.

I will quote her:

"What is a ThingLink?

"ThingLinks are unique identifiers, ID codes, for things. By labeling products with ThingLinks others can quickly find information about the thing on the net. ThingLinks can be put on physical things like clothing, crafted goods, or places, by using stickers and labels, but they could just as well be used online by putting a ThingLink on your blog post, latest digital home movie, or music piece. When people find a ThingLink they can use a search engine, or an encyclopedia like WikiProducts to find information about the creator or read reviews for example. They can also add their knowledge and opinions by adding a ThingLink to their blog post or write a new wiki page for the thing."

"ThingLinks come in different versions.

"Short ThingLink

"This one is suitable for printing on physical labels.

"Long ThingLink

"A long ThingLink is used for online things. Anyone can create unlimited numbers of these links without the risk of accidentally getting the same ThingLink as a completely different thing.

"Special ThingLinks

"Many products already have unique IDs: ISBN numbers for books, or EAN and UPC codes for most commercial products (look under the barcode)."

Ulla-Maaria is an expert on handicrafts and I think she would like to make a private, world-spanning version of ThingLinks. Maybe that could happen, if she could harvest the heaving masses of Wikipedia to do all her unpaid lazyweb labor. I certainly wish her luck. It's a good term, ThingLink, it's modest and punchy and to her point. I happen to think that Wiki is pretty good coinage too. You don't mistake the word "Wiki" for anything else.

Here's another contender from Julian Bleecker, who happens to be attending this event. He just wrote a manifesto about his concept, which I enjoyed very much.

There we go, folks: a picture of an online manifesto. Man, that's the kind of graphic design that gets an author's heart racing.

"Blogjects" – objects which emit data about their use.

Julian, as I mentioned before, is very into Theory Objects, so what he's seeing here in the blogject is an object capable of provoking web discussions. A blogging object, a blog-ject, is a kind of highly conversational conversation piece. Blogjects contribute to discourse, not because they are smart and can talk all by themselves, but because they spew up information that can make a difference in the physical world and get some trackback.

I think this blogject notion might catch on. It's "semantically legible" as Julian says, because we mostly know what blogs are and what objects are. Hooking up an object to a blog is something most any serious media-design student could do right now. A spime is something thirty years from now, but a blogject is like a university lab project. You could pull down a grant for one and make one pronto, then see if you could get anybody to blog it. I blogged that Blogject manifesto right away, so a blogject is already a kind of Theory Object. If people started making blogjects in real life, I'd get real interested. I'd probably write 'em up in MAKE magazine.

Now, I consider a spime to be much like a blogject, but a spime is inside out, or maybe top-down: a spime is more like a blog that emits objects. As I said earlier, "Spimes are manufactured objects whose informational support is so overwhelmingly extensive and rich that they are regarded as material instantiations of an immaterial system. Spimes begin and end as data." So if you had a blogject, and it somehow created a really huge, popular blog, wouldn't a natural part of that blog be a way to make that blogject? It's the future version of today's MAKE magazine open-source recipes for building things at home. If you're reading my blogject's blog, about how to put videocameras on pigeons (let's say), then of course I'll tell you how to put videocameras on pigeons. Sure, hit my Paypal button and I'll fab it out and ship it, then we'll track it together and we'll all share the data as collective intelligence. Then Tim will get me to write a hacks book! We'll all be happy!

Some other similar terms of art I have come across lately:

- UFOs: Ubiquitous Findable Objects. I don't believe in UFOs, they sound too much like science fiction.

- EKOs: Evocative Knowledge Objects. Just another acronym. Acronyms are the small change of the tech world.

- Mobile social software.

- Mobile appliances, automatic networks.

The network support system for spimes, blogjects and ThingLinks is probably some kind of handheld networked interface. A descendant of today's cellphones, most likely. You enter a cell full of linked, blogging things, and you wave your detector around: are they turned on, are they powered up, what's the story here? What do you call a handheld interface for real, actual objects? "A wand."

An Internet of Things. An Ecology of Things. Those were the names given to classes I was teaching at Art Center College of Design in California last year.

"Object hyperlinking." That sounds pretty accurate, but I always wonder about the lifespan of terms like "hyper," "super," "ultra," and "post." Ultra compared to what? Why not just say thinglinks?

Here are some fabjects.

These things are pretty hyper. They're hyper, super and ultra. But compared to what? They're nothing compared to what's coming. There are four or five different names just for the devices that make these objects. Rapid prototype machines, fabricators, 3-D printers, laminators, fabs... We shouldn't call them "super" anything because these are babies, they are weak and frail emergent little things, with big appetites.

- Locative media.

- The geospatial web.

- Context-aware computing.

- Ambient computing.

- Physical computing.

You can just list these overlapping synonyms and you can literally HEAR technology emerging.

The infocloud.

The bluesphere (which is the area of coverage where Bluetoothed objects can see each other).

Data shadows. This is the data cast off by objects.

Some nice adjectives:

ubiquitous, pervasive, invisible, embedded, continuous, ambient, physical, tangible.

A verb:

to instantiate. An instantiation, a term which comes from computer programming and is now in a position to be applied to physical objects.

Spychips – this is the pejorative and the term of abuse. "Spychips" is the term of art guaranteed to provoke a fight.

It's morally wrong to avoid controversies just because you don't want anybody confronting you over what you are doing. There's something snotty about an author who expects only good reviews for his books. The author of an emergent technology is in the same boat. If nobody is dismissing you as hype, then you are not being loud enough. If nobody thinks what you are doing is dangerous, you are doing something that has no power to change the world. You'd better fight it out with words before you fight with laws. You're gonna be in no position to think straight when you suddenly get hauled in front of Congress and confronted for being "evil." You need to feed the critics. Don't feed the crazy ones, but a loyal opposition is hugely valuable.

What kind of people go "Yeah brother!" when I talk this way and show these pictures? They are a class of people who don't have a proper name, either. Hackers? That word is way too old, and besides, that word means a criminal now. Alpha geeks, wranglers, protocrats? Makers? Technorati? Blogerati? What? What to call them? Will they name themselves, or will someone else name them? What is their legacy?

You know what you're seeing here? The legacy of the past. This is the emerging tech of the past: receding tech. Receding tech, decaying tech, is still polluting us. These are the silent, dead things that still occupy our space and time. Things that don't blog. Things that don't link. Things that don't evoke any knowledge. Non-spiming dead things.

The future I'm describing is a visionary future. The status quo is THIS. This is the primordial soup of passive thing-ness. This is the STALE primordial soup. It flakes off and rots in our biosphere, and it gets soaked into our bodies. We are burying all that is dead – within US.

We need a way out of the dump. There are ways out. But we don't have words for them yet. That's all we've got time for, and then some. Thanks for your attention tonight.